Our team has had mixed feelings about AI over the last few years, from unbridled enthusiasm and awe to deep dread and “What is my value now that AI can do my job?”. Much of that has dulled, to an extent, through extensive trials, tribulations and testing. Serious security, privacy, environmental, and intellectual property risks exist, and this article aims to dispel some myths and set a framework for when and how we use AI.

Key takeaways

- AI can enhance creativity, efficiency, and problem-solving when used thoughtfully.

- "AI" is wildly hard to define; we’re primarily talking about Generative AI. In 2 months it’ll possibly be 100% different, as it’s moving so fast.

- Transparent, client-opt-in adoption builds trust and safeguards values.

- Human oversight is essential to maintain quality, ethics, and accountability.

- Alongside environmental and data privacy risks, we also pay close attention to fairness and bias.

- AIs are impressive but, without careful interrogation, can very quickly lead you down a path that wastes time.

- When you become a willing participant, you have choices about what you input and what you do with the output.

- To help us and our clients navigate the space we’ve summarised the modes of use in our AI Usage Policy.

- Balancing innovation with environmental and social considerations ensures long-term benefits for people, business, and the planet.

"AI" is wildly hard to define, we’re primarily talking about Generative AI

AI is one of the loosest terms going around: how it’s utilised is all over the place, and its definition includes 16 pages of terminology in the ISO / IEC Standard.

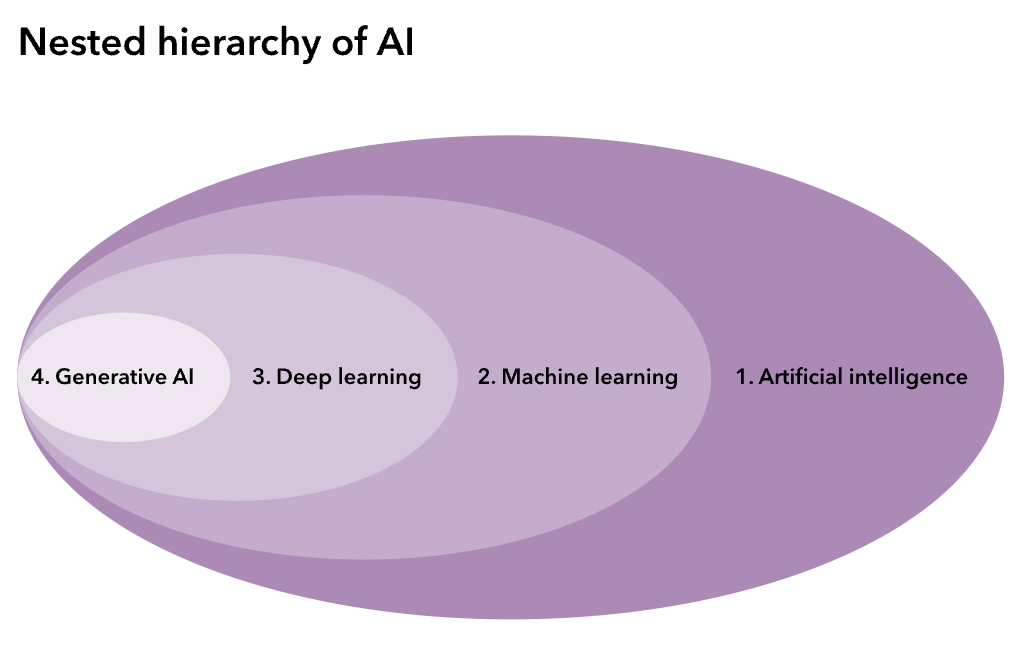

In many instances, companies referring to AI are just talking about predefined logic (i.e. if this then that, but much more complex), whereas AI ultimately learns by itself, either once off (from data fed into a model) or iteratively through feedback. Within AI there is machine learning (a wide array of technical techniques), deep learning (a subset of machine learning that aims to mimic the human brain’s learning process) and Generative AI (where deep/machine learning is applied to create outputs).

Basic terminology you may hear about

- Hierarchy: AI, Machine Learning, Deep Learning & Generative AI

- Learning methods: Supervised; Unsupervised learning

- Function & Process: Forecasting & regression; Classification; Clustering; Natural Language Processing; Image recognition & computer vision; Recommendation systems

Our friends Portable do an excellent job in their comprehensive "Policy for teams using AI" report, or if you want to dive deeper, check out ISO Standards Terminology / Definitions or OECD’s Framework for Classification of AI.

Our quest for meaningful positive impact

We purely exist to “Unlock the potential of the new economy to leave behind a better world”, which leads to daily questions around how we can be more productive, more valuable, more impactful, and more efficient. A better world means doing things with integrity: in our approach, the work we deliver, how we position our brands, and also the tools we use.

Do the tools smash the planet with carbon [Hi, Amazon]? Are they sucking up data and manipulating mass populations [Looking at you Meta/Google]? Are they generating profits that are fuelling war [C’mon Spotify]? Let alone the basic fact that AIs learnt from (read: stole) the intellectual/creative property of most people on the planet, and generate outputs that would normally be considered IP/copyright theft.

People and the planet are physically impacted by AI

There is a litany of evidence that AI (from hardware to data centres, and from model training to usage) can cause tangible physical harm to people and planet. Studies estimate that even small actions, like drafting an email via AI, can consume several litres of water, with total AI water usage predicted to reach trillions of litres per year in the near future. CO₂ emissions are projected by some researchers to increase measurably in the US purely from AI growth. One data centre can use as much water as 100,000 homes.

- Writing an email with AI consumes 3 litres of water [2024 Frontiers in Sustainability], and AI water usage is predicted to reach 6.6 trillion litres per year in 2027 [2023 arXiv – Making AI Less “Thirsty”]

- CO₂ emissions are projected to increase by 0.4-1.9% in the US purely from AI [2024 Frontiers in Sustainability]

- One data centre uses the same water as 100,000 homes [2024 ING Think]

- Data centres emit air pollutants (aka bad stuff) such as PM2.5, Nitrogen Dioxide and Sulfur Dioxide, which are linked to asthma, heart attacks and cognitive decline [2024 JLARC - Data Centers in Virginia, 2024 arXiv - The Unpaid Toll]

- 40,000 kids (7 yrs old and up) are involved in cobalt mining in the DRC [2024 Save the Children]

But we’re not idealists. Most of these tools are the best, by an extreme margin, at delivering outcomes. So a deep internal conflict arises, and there’s no clear line between productivity and ethics, especially when our work can have a direct positive impact on systems [RIAA], planet [ATEC] and individuals [CPSN].

A trade-off decision

For example, we conduct detailed market research through in-depth 60-80 question surveys. Through detailed analysis we can identify how our clients can improve, what they should focus on, and which groups of people are most interested or need convincing. It’s very informative and valuable. The most effective way to collect diverse responses, by 10-20X, is Facebook, specifically their in-feed ads. Old school, but it works. The data quality is high and the people are real. We’ve tried so many other ways to get these responses and they’re just unviable. It’s a real-world trade-off we wrestle with daily.

It comes down to a case-by-case, subjective but educated decision, and at times we’ll be wrong.

AI Bias & Fairness

Alongside environmental and data privacy risks, we also pay close attention to fairness and bias. AI systems trained on skewed datasets can unintentionally reinforce stereotypes or under-serve marginalised groups. This is especially important in areas like recruitment, content guidance, or client-facing outputs. That’s why we maintain human oversight, diversity of input, and context awareness as essential safeguards.

Trials and tribulations

Now, when it comes to AI, the question of productivity is extremely unclear. It varies day by day, task by task, or maybe with the position of Saturn in relation to how well our tomatoes are growing.

We’ve carefully incorporated AI into various services we provide, tried it extensively as individuals for personal support, and listened to the honest voices in the space - we’re trying to cut through the hype.

We’ve used it for everything from summarising a meeting, to turning a bunch of notes and thoughts into a coherent email, drafting messaging or brand strategy, reviewing code, analysing data, designing logos, making social tiles, generating websites, and educating us on a vast array of topics.

Its first or second attempt at a well-crafted prompt can be mind-blowing, but then cracks quickly appear. You click the first 5 specific references and 4 of the links don’t exist, or the content of the article doesn’t cover the topic at all or, worse, specifically contradicts the AI’s output.

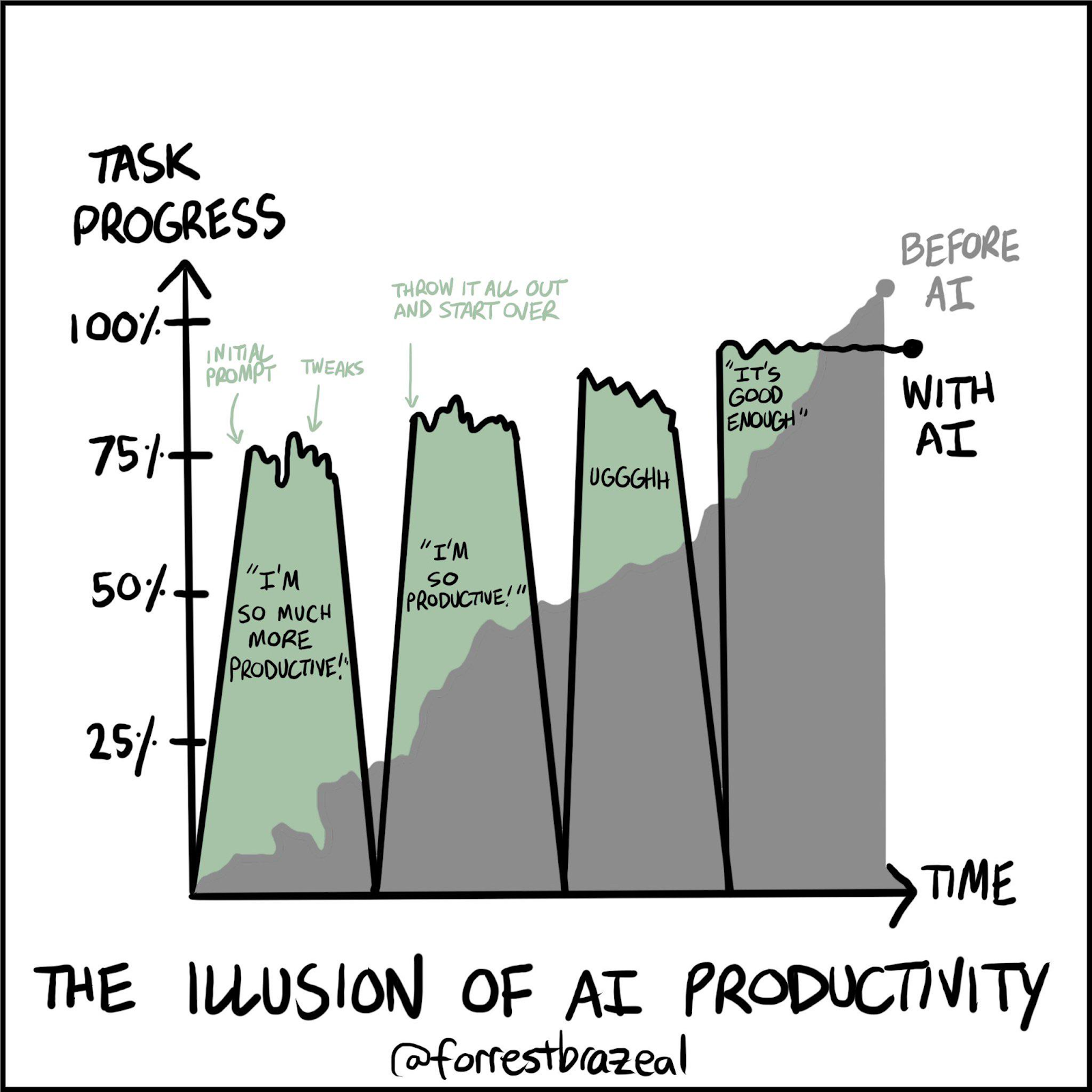

The illusion of productivity

AIs are impressive but, without careful interrogation, can very quickly lead you down a path that wastes time. I’ve personally been playing with it in a little app, to learn a new programming language and tools, and to see how well AIs work. I’ve used the premium models, paid plans and local models, given lots of context & prompts, used best practice prompt templates and the like, and basically no matter what you do, this can be the feeling of using AI.

It quickly feels like you’re almost there, but then somehow the progress slips through your hands.

I’ve personally lost hours and hours of time going in logic loops, creating new bad code, having to start again, unpick things. It’s real. (Note: I’ve found the right approach that is working well, but it’s not ‘press a button and magic happens’. This article isn’t about how to use AI well.)

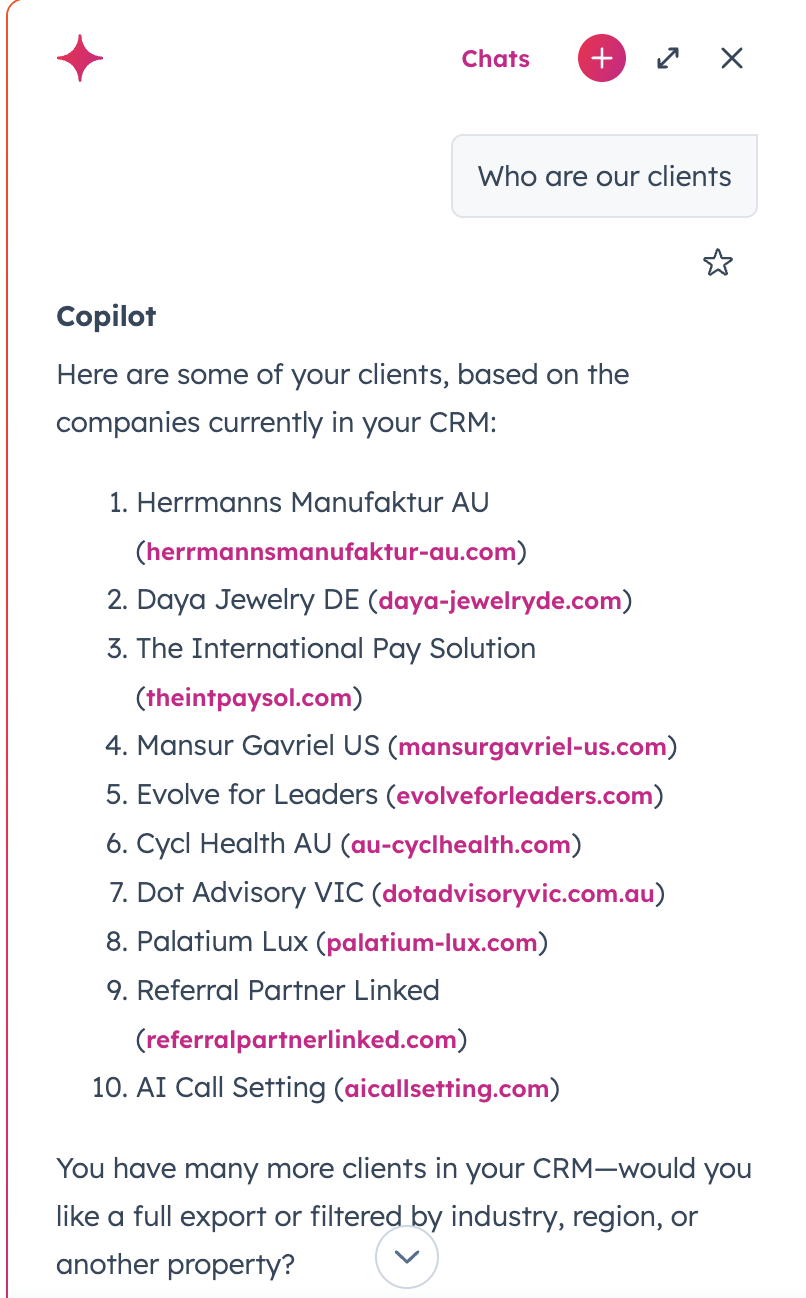

Here’s an example of a basic search in our CRM: many of the companies listed don’t exist in our account, we’ve never heard of them, and one is our amazing accountant (not a client!).

A crude ranking of risk and value for some tools we’ve trialled

We are talking theoretical risk and actual value from our experience

It can be thorny too.

Beyond productivity it’s not all roses - we (humans, including the AI engineers) don’t know how these models actually work. Massive AI-related leaks and failures at Samsung, Microsoft, Zillow and McDonald's are estimated at over $1B in losses, with hundreds of millions of individuals’ personal information shared. [TechCrunch]

"It details over $1 billion in documented losses from AI-related breaches, including Samsung's semiconductor code leaks, Microsoft's 38TB data exposure, and Zillow's $500M algorithmic failure. The report covers legal precedents establishing company liability for AI outputs, vulnerability rates in AI-generated code, and the McDonald's recruitment chatbot breach affecting 64 million applicants." [Wiz Blog, insideAI News, SecurityWeek]

Without labouring the point, what you put into AI can be accessed by others, in theory. This also applies to lots of technology, which we’ve seen with major breaches in federal government, major insurers, telcos and airlines.

The risk isn’t new, it’s just that billions of individuals are now willingly sharing the deep, dark secrets of themselves or their company.

Beyond sensitive information, inaccurate responses, left unchecked, can directly hurt business. “Security researchers consistently find that 30-50% of AI-generated code contains exploitable vulnerabilities.” [arXiv]

In less direct ways, it could misinform you about your data (I’ve seen it completely misinterpret and mis-summarise data or market research), so you make decisions that aren’t well informed and get sent down the garden path. (Not sure why garden metaphors came out in this section. I’m behind on weeding our garden, maybe that’s why?)

You are unwillingly participating.

The AI avalanche is overwhelming. It’s everywhere, and every tech company is fighting to claim the most active users to skyrocket their share price, get more funding and realise the $4.8 trillion opportunity.

It’s in Facebook Messenger. All over your phone. Listening & watching your meetings. Reading all your emails. Accessing all your documents. I’m not talking in theory; it’s literally penetrated almost everything.

I’d say that in over 50% of the meetings I host on Google Meet or Zoom, the first participant is some friendly AI app (Otter.ai or Zoom itself) that politely asks to join to summarise the meeting. Until now I thought “One of our clients must want to use that app to help them, so I’ll accept it.” But we realised as a team that’s hugely unethical, AND when we ask, nobody actually wants it there.

What’s more hilarious is that when I have ‘tried’ them, the notes were accurate and amazing, but useless: nobody used them. That’s because everyone was taking their own notes or just using their brains, and taking responsibility to be present and accountable. So in practical terms it didn’t help with productivity at all.

They are watching and recording your faces, conversations, screenshared documents, and chat messages with links to important information. And we’re all unwillingly letting it happen.

A more perverse version is happening in business software: AI features are automatically turned on and allowed to do as they please.

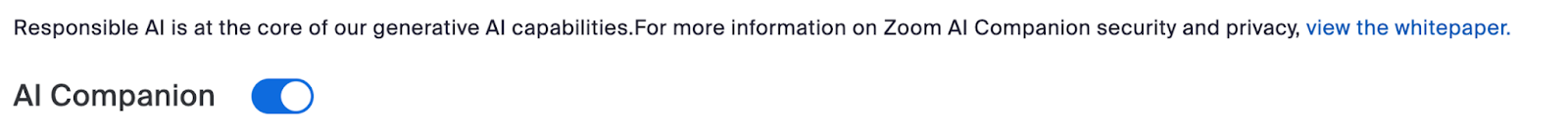

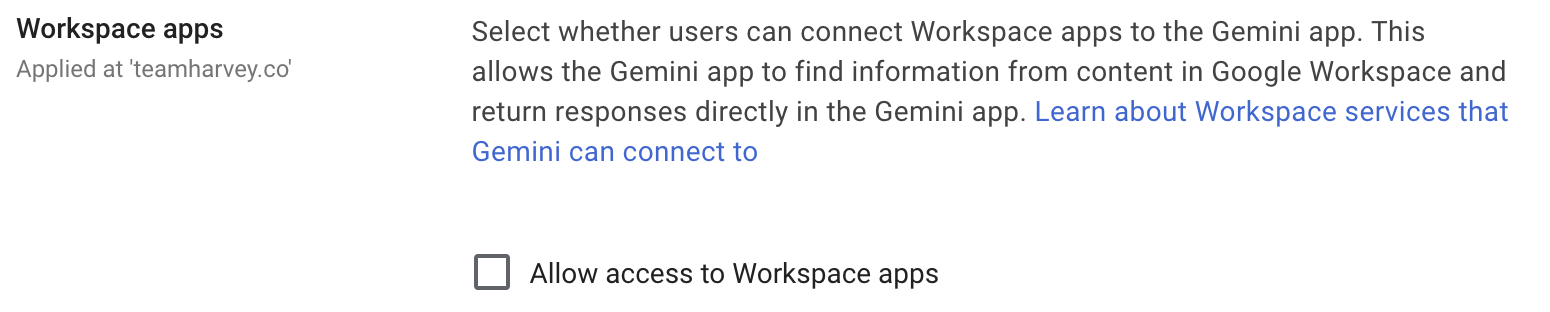

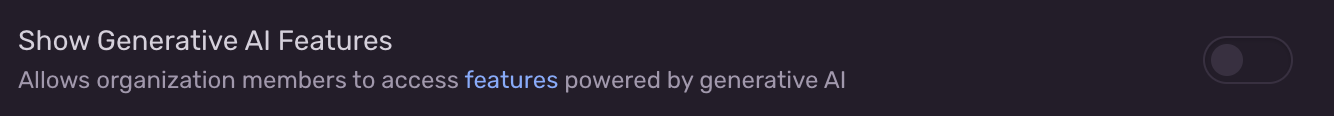

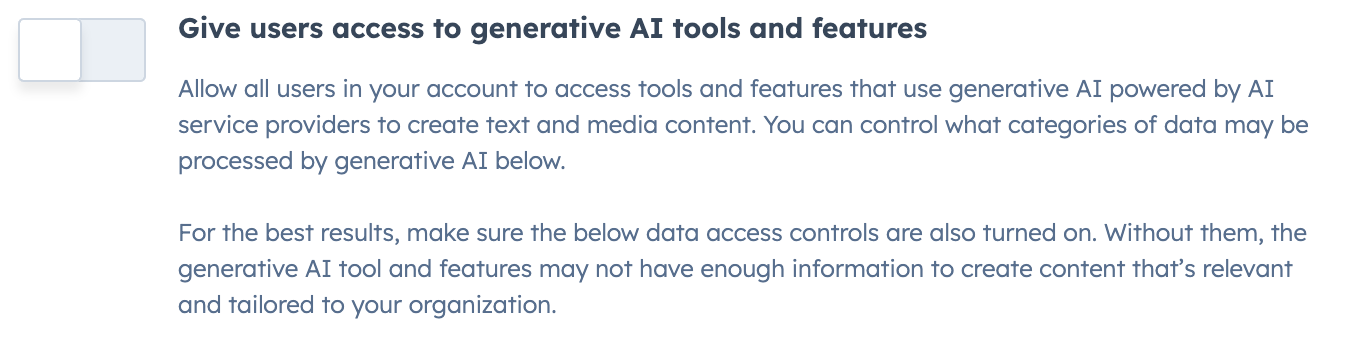

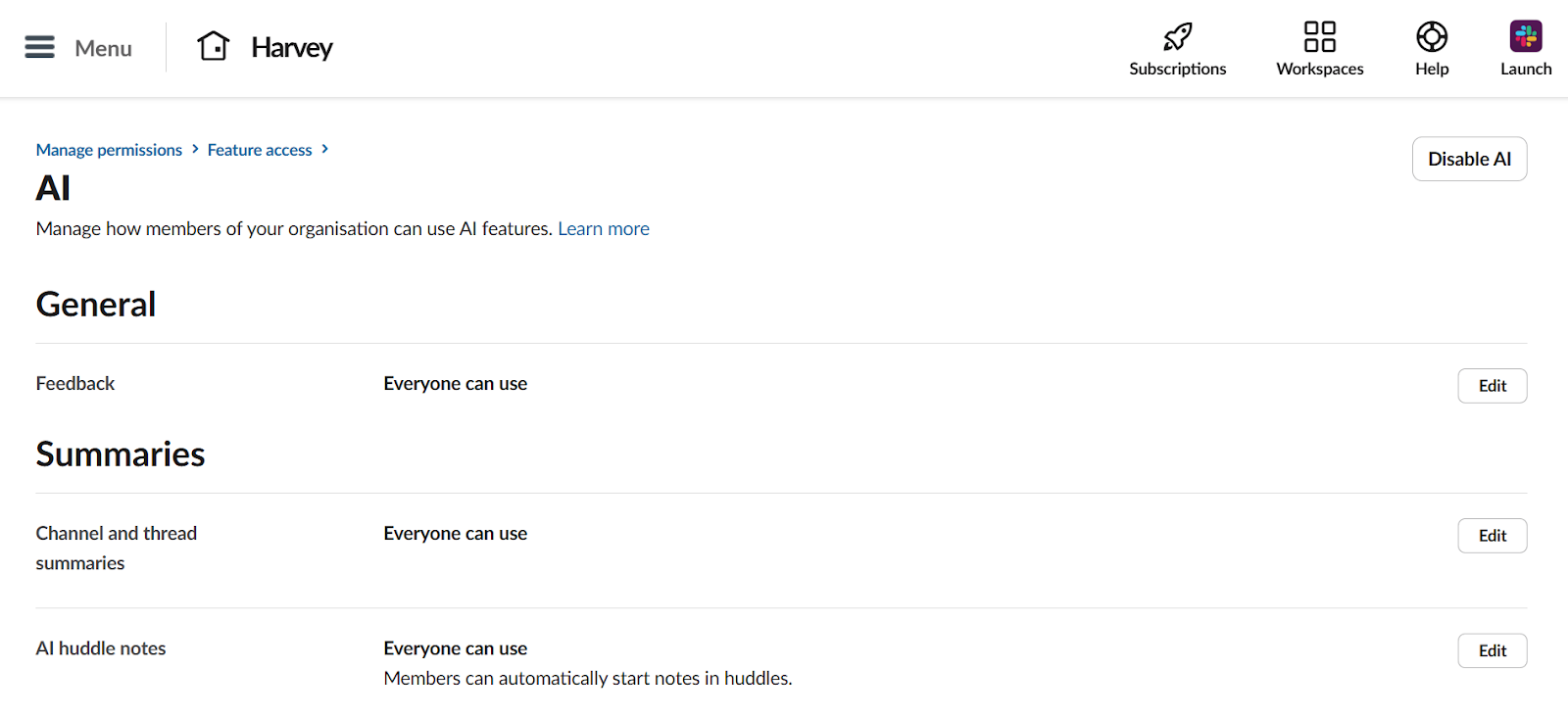

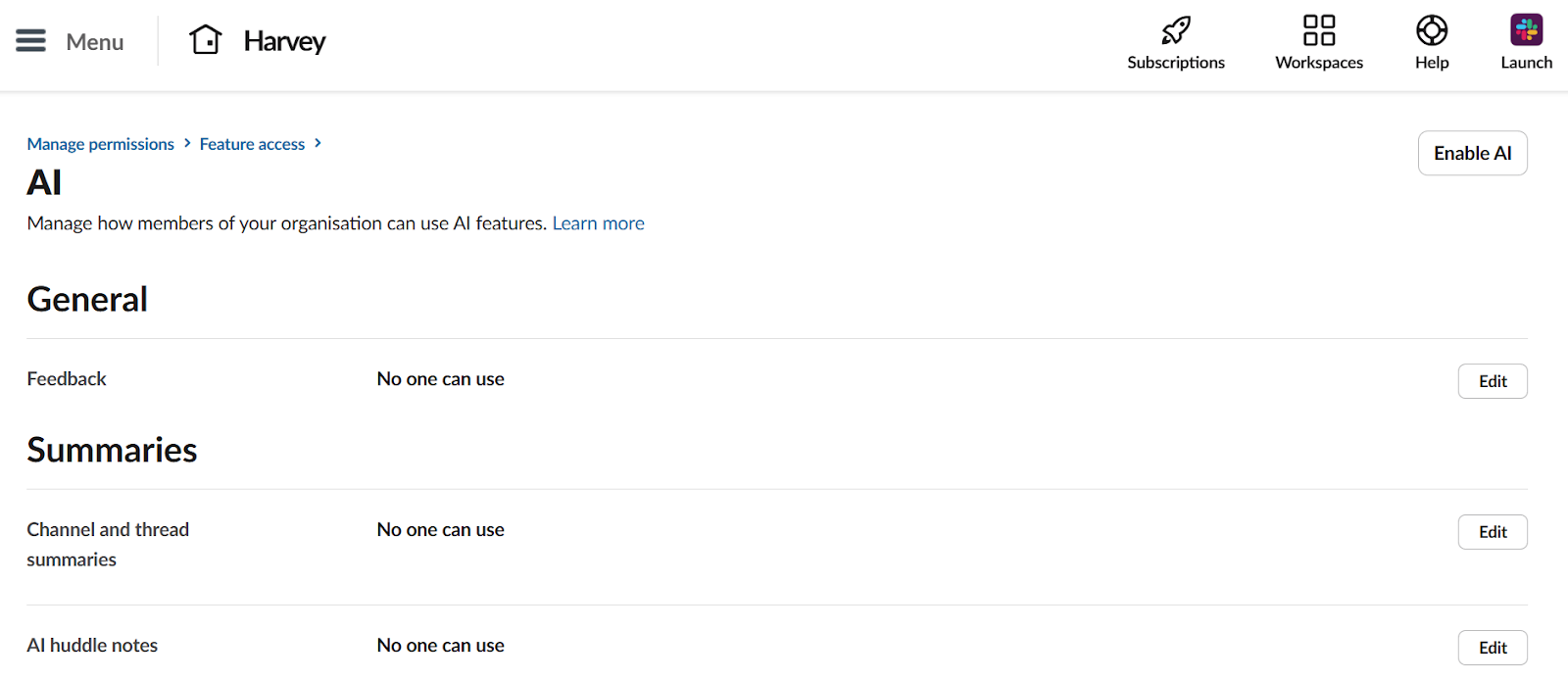

So we disabled them all by default

So we went through all of our apps and disabled them. Most notably Google Workspace (Docs, Gmail, and more), Slack, HubSpot & Zoom, which house our most sensitive information. In all 4 instances the benefit is zero or extremely low.

Meetings: Zoom

Documents & Email: Gemini in Google Workspace

Code logging: Sentry

CRM: HubSpot

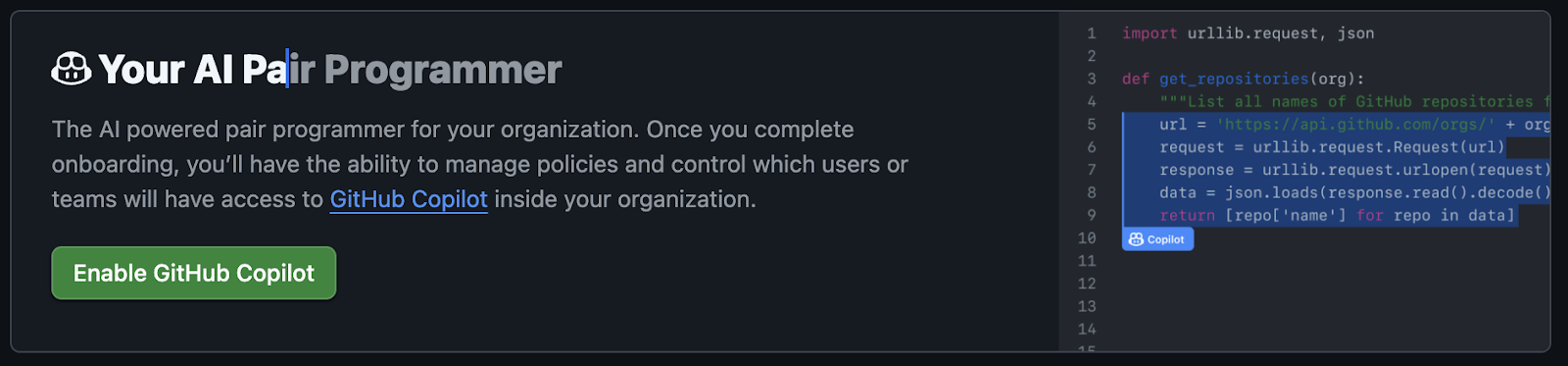

Source control: GitHub

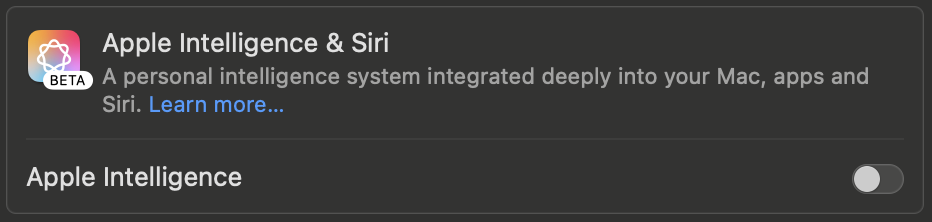

Operating System: Apple Intelligence, Windows & Android

Interestingly, we have Alexa & Google on our speakers at home and I know they’re listening, but for some reason I have left them on. I am rethinking this right now.

Analytics Platform: Microsoft Clarity

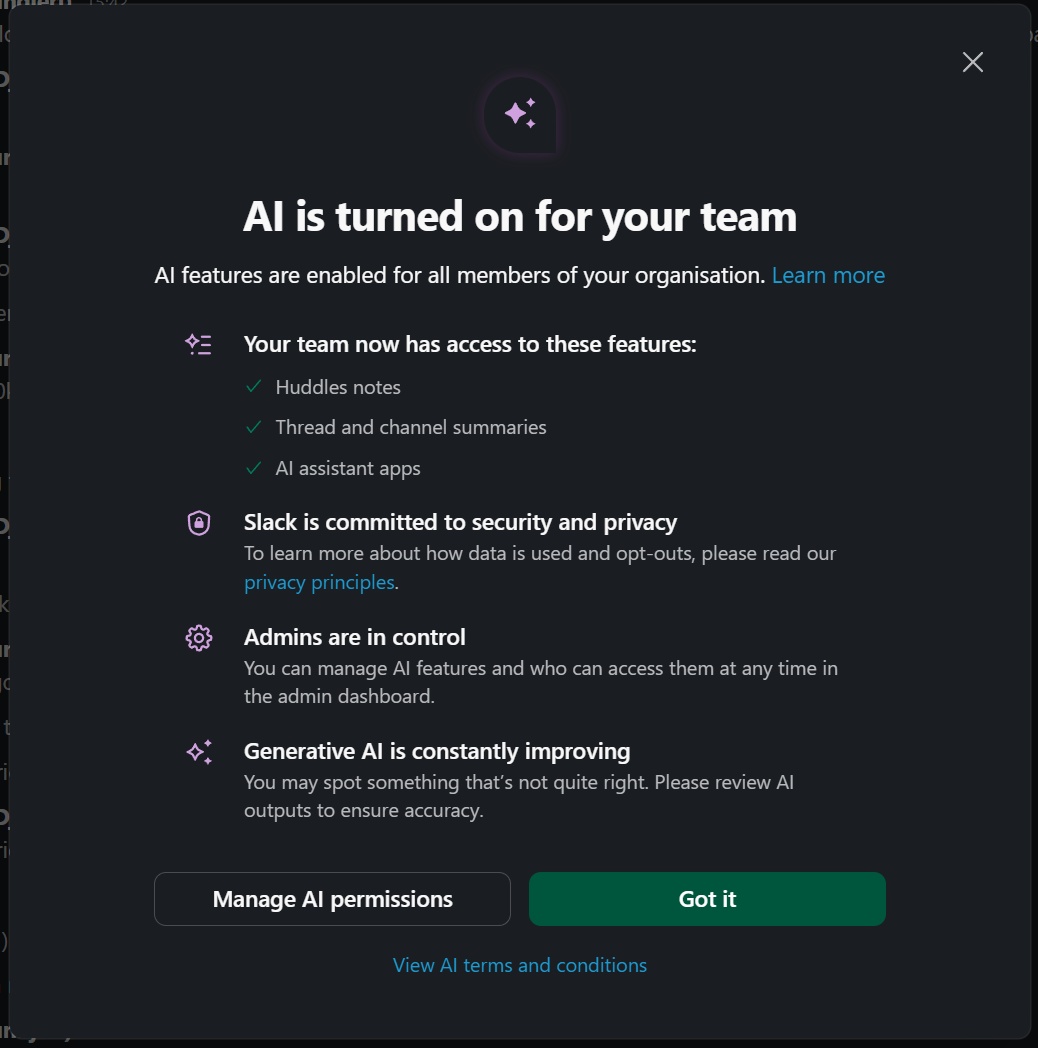

Messaging App: Slack

Today, literally after writing this first draft, Slack (one of our favourite apps, but now owned by Salesforce, less favourite) turned on AI by default, with lots of lovely disclaimers. Slack’s setting was actually quite clear, whereas Google’s & Zoom’s were very difficult to find and unpick.

So we clicked “Manage AI permissions”

And disabled it by default. We’ll come back to review the options if it hits the right mark.

They have amazing (sarcasm) policies that clearly state they won’t, will or maybe will, use your data. But beyond those ambiguities, they don’t know how AI is using the data.

Become a willing participant

When you become a willing participant, you have choices about what you input and what you do with the output. For us, that includes our clients - as we act with their information, code, and marketing, we give them the option to direct how we participate. You can see exactly how this works in practice in our AI Usage Policy.

To help us and our clients navigate the space we’ve summarised the modes of use.

Productivity estimates are illustrative and, as outlined above, it depends. They indicate what happens when things go well: we do the right thing and the AIs are having a good day. Arguably the gain is infinite when something the user couldn’t fathom doing can now be done in minutes.

^Sensitive information: In itself this is ambiguous and broad, ranging from extremely sensitive system security keys and passwords to your semi-sensitive brand strategy. How much harm could come to your business if it was usable by the public or competitors?

*Personally identifiable information: Technically it’s anything (for example 2-3 pieces of information like DOB, address and name) that can be used to impersonate someone or conduct fraud, but we think of it as also including health and financial information. Learn more here

When we use various modes of AI.

Examples of work where we do or don’t use AI

The level of utilisation of AI in types of work

As a general guide, it depends on the data / inputs we’re using and how we’re using the outputs.

The fully conservative, cautious mode is “No AI”, whereas a more risky mode is “Assistant (Third-party)”.

Below is our default position and each client can choose to dial up or down.

So it’s a crazy era we’re in. There are real risks of damage to your organisation, and your clients / customers. The impact & ethics of the AI supply chain are very questionable. We’re all auto-opting in.

Ultimately, we’ll keep using these tools where they add real value - but always with caution, client opt-in, and a human-in-the-loop mindset. For the exact modes, boundaries, and safeguards we follow, see our AI Usage Policy.
